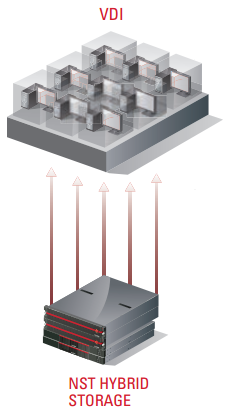

Virtual Desktop Infrastructure

NST Hybrid Storage Appliance for the Virtual Desktop

VDI is no longer a new concept in data centers. However, best practices around this technology still vary today, especially when it comes to selecting the right storage platform.

Server virtualization has made it possible to bring desktops into the centralized data center infrastructure, allowing the most efficient use of technology resources. Virtual Desktop Infrastructure, or VDI, is defined as the practice of running user desktops inside virtual machines that, in turn, run on virtualized servers hosted in the data center.

Virtual Desktop Infrastructure can bring the same benefits of server virtualization to desktops: simplified administration and management, reduced operational costs and demonstrated reliability. Before designing a VDI solution, businesses need to be clear on their objectives and ask themselves the following questions:

- What is the problem I am trying to solve?

- Why do I believe VDI will help solve this problem?

- Do I understand the impact VDI will have on my data center storage?

The answers to the first two questions may be financially driven or address desktop management challenges. The business driving factors will vary, but many VDI deployments have failed or stalled because businesses have not fully assessed the impact that the consolidation of compute and storage resources has on the service level a desktop user expects.

This white paper focuses on the importance of selecting the right storage for your VDI deployment, so that you achieve the highest level of benefit from your investment.

Let's start with what we need to consider

- Does the cost of deploying VDI outweigh the benefits? Is there an ROI that can be achieved? Some businesses overprovision storage to meet their performance and capacity requirements, which in turn increases capital and operational expenses. The point here is: more disks = more money. Overprovisioning also increases the overall data center footprint, which can increase power and cooling expenses.

- When consolidating standard desktops into virtual desktops, it is important to have a basic understanding of how their I/O workloads differ. A standard Windows desktop produces a random read/write workload, while a single virtual desktop produces a more sequential workload to the hypervisor. However, when hundreds of virtual desktops run together, the combined workload from the hypervisor to the storage becomes random. These performance characteristics have made most legacy storage arrays poorly suited for use as back-end storage for VDI.

- Resource challenges can also end a VDI deployment before it even has a chance to start. Some businesses have found that a VDI project can easily consume IT resources, so finding a storage solution that is easy to deploy and easy to manage will allow for a faster VDI implementation.

- It can also be difficult to determine how large a VDI deployment will grow. For example, a VDI design meant for 1,000 seats will more than likely not meet the needs of a 5,000-seat deployment. It is important to have a scalable storage solution that can grow with the business.
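A small simulation can illustrate the shift from sequential to random I/O described above. It is a sketch built on simplifying assumptions that do not come from this paper. Each desktop is assumed to read strictly sequential block addresses in its own region of a shared datastore. The hypervisor is assumed to forward requests from all desktops in a random interleaving. The helper names (`desktop_stream`, `interleave`, `sequential_fraction`) are hypothetical.

```python
import random


def desktop_stream(desktop_id: int, length: int, region_size: int = 1_000_000) -> list[int]:
    """Sequential block addresses for one desktop in its own region (assumed layout)."""
    start = desktop_id * region_size
    return [start + i for i in range(length)]


def interleave(streams: list[list[int]], seed: int = 0) -> list[int]:
    """Merge per-desktop streams in random order, as a shared hypervisor might."""
    rng = random.Random(seed)
    cursors = [0] * len(streams)
    active = [i for i, s in enumerate(streams) if s]
    merged: list[int] = []
    while active:
        i = rng.choice(active)           # pick whichever desktop issues next
        merged.append(streams[i][cursors[i]])
        cursors[i] += 1
        if cursors[i] == len(streams[i]):
            active.remove(i)             # this desktop has finished its reads
    return merged


def sequential_fraction(addresses: list[int]) -> float:
    """Share of requests whose address immediately follows the previous one."""
    if len(addresses) < 2:
        return 1.0
    seq = sum(1 for a, b in zip(addresses, addresses[1:]) if b == a + 1)
    return seq / (len(addresses) - 1)
```

With one desktop, every request follows the previous one, so `sequential_fraction` returns 1.0. With 100 desktops interleaved, only a small fraction of consecutive requests remain adjacent. That random pattern is what the storage array actually sees.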

De-Mystifying Storage for the Virtual Desktop

Two factors are critical to the success of any virtual desktop implementation, and both are directly affected by the storage system involved:

- Performance requirements for each virtual desktop

- Capacity requirements for each virtual desktop

These two variables, performance and capacity, are not independent of one another: the design and behavior of a virtual desktop deployment have implications for both.

Determining the performance required for each virtual desktop is a fairly simple calculation: multiply the IOPS required per desktop by the number of virtual desktops deployed. The rule of thumb for virtual desktop performance is 5 IOPS per light user, 10 IOPS per moderate user and 15-20 IOPS per heavy user. It is common to engineer for peak performance. In the case of VDI, it is not unusual for all desktops to boot up at the same time, causing what is known as a boot storm. However, provisioning solely for peak performance leaves businesses with costly, overprovisioned storage. Keep in mind that if the VDI storage system will support other applications simultaneously, their performance requirements must be factored in as well.
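The sizing rule above can be written as a short calculation. This is a sketch, not a sizing tool. It uses the per-user rule-of-thumb figures quoted above and takes the upper 20 IOPS bound for heavy users. The `peak_factor` used to model a boot storm is a hypothetical input, because the real multiplier depends on the environment.

```python
# Rule-of-thumb steady-state IOPS per user profile, as quoted in the text.
# Heavy users are quoted as 15-20 IOPS; the upper bound is used here.
IOPS_PER_USER = {"light": 5, "moderate": 10, "heavy": 20}


def required_iops(user_mix: dict[str, int], other_app_iops: int = 0) -> int:
    """Total steady-state IOPS for a VDI deployment.

    user_mix maps a profile ("light", "moderate", "heavy") to the number of
    virtual desktops of that profile. other_app_iops covers any additional
    applications sharing the same storage system.
    """
    desktop_iops = sum(IOPS_PER_USER[profile] * count
                       for profile, count in user_mix.items())
    return desktop_iops + other_app_iops


def peak_iops(steady_state: int, peak_factor: float) -> int:
    """Peak estimate: steady state scaled by an assumed (hypothetical) boot-storm factor."""
    return round(steady_state * peak_factor)
```

Take a hypothetical mix of 600 light, 300 moderate and 100 heavy users sharing the array with 2,000 IOPS of other applications. For that mix, `required_iops` returns 10,000 IOPS (3,000 + 3,000 + 2,000 + 2,000). Comparing that steady-state figure with `peak_iops` for an assumed boot-storm factor shows how much extra capability pure peak provisioning would buy.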

Performance is only one half of the equation for most companies. Capacity per virtual desktop is also critical, along with the cost per GB. For most companies the economics must make sense; otherwise, the VDI project is a non-starter. The objective is to strike the right balance between price and performance. This is especially true for virtual desktop deployments, because user storage is moving from lower-cost local disks to a centralized, enterprise-class storage system.
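Performance and capacity are coupled, so whichever requirement is larger sets the number of drives an array needs. The sketch below makes that explicit. `DriveType` and the figures passed to it are hypothetical placeholders, not specifications for any NST or third-party product. Substitute real per-drive IOPS, usable capacity and price when sizing.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveType:
    """A hypothetical drive option; the figures passed in are illustrative only."""
    name: str
    iops: int          # sustained random IOPS per drive (assumed)
    capacity_gb: int   # usable capacity per drive in GB (assumed)
    price: float       # price per drive (assumed)


def drives_needed(drive: DriveType, iops_req: int, capacity_gb_req: int) -> int:
    """Number of drives required to meet BOTH the IOPS and the capacity target."""
    for_performance = math.ceil(iops_req / drive.iops)
    for_capacity = math.ceil(capacity_gb_req / drive.capacity_gb)
    # The larger requirement wins; the other resource ends up overprovisioned.
    return max(for_performance, for_capacity)


def config_cost(drive: DriveType, iops_req: int, capacity_gb_req: int) -> float:
    """Total drive cost for a configuration built from a single drive type."""
    return drives_needed(drive, iops_req, capacity_gb_req) * drive.price
```

Consider a hypothetical HDD rated at 150 IOPS and 4,000 GB, with a target of 10,000 IOPS and 50,000 GB (for example, 1,000 desktops at 50 GB each). That configuration needs 67 drives for performance but only 13 for capacity. The array is performance-bound and most of its capacity sits unused, which is the "more disks = more money" problem. A high-IOPS, low-capacity drive shows the reverse, where capacity dictates the drive count.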

One of the ways IT administrators try to reduce capacity requirements is to create a master image for all desktops. An image includes the operating system and applications and is meant to be shared by all virtual desktops. While using a master image is recommended, it can generate a large number of read requests from all virtual desktops during boot-up and login.
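Simple arithmetic can sketch both the capacity saving from a shared master image and the read concentration that comes with it. The model assumes each desktop stores only a small per-desktop delta (user changes, temporary files) on top of the shared image. `delta_gb`, `boot_read_gb` and the example sizes are hypothetical, and real cloning technologies differ in how deltas grow.

```python
def full_clone_capacity_gb(desktops: int, image_gb: float) -> float:
    """Capacity when every desktop keeps its own full copy of the OS and applications."""
    return desktops * image_gb


def shared_image_capacity_gb(desktops: int, image_gb: float, delta_gb: float) -> float:
    """Capacity with one shared master image plus an assumed per-desktop delta."""
    return image_gb + desktops * delta_gb


def image_reads_at_boot(desktops: int, boot_read_gb: float) -> float:
    """GB read from the single master image if every desktop boots at once.

    boot_read_gb is a hypothetical amount of image data each desktop reads
    during boot; all of it lands on the same shared image.
    """
    return desktops * boot_read_gb
```

Take 1,000 desktops with a 40 GB image and an assumed 5 GB delta each. Full clones need 40,000 GB, while the shared image needs 5,040 GB. The trade-off is that every boot read now targets the same image, so boot and login storms concentrate read load on a small set of blocks.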

When sizing for capacity, keep in mind that not all storage is created equal. Consider not only the VDI capacity requirements, but also whether the storage can be shared with other business applications such as databases, email and user home directories. From a management perspective, it is more efficient to meet all capacity requirements from a single storage array.

Dramatically Improve Performance

Nexsan has developed FASTier, a unique, high-performance hybrid caching technology that accelerates the performance of underlying spinning disks. Most, if not all, storage systems use some caching techniques to improve performance. Typically, storage controllers have a memory pool that stores data temporarily, then purges it as the pool fills up. A number of techniques are used. These include keeping recently used data in memory and tracking data that is frequently accessed. Some controllers also run algorithms that monitor which blocks of data are typically requested together and prefetch that data into cache before the application requests it.
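The controller caching behavior described above can be illustrated with a minimal least-recently-used (LRU) block cache with naive sequential prefetch. That behavior covers a fixed memory pool, purging as it fills, keeping recent data, and prefetching data likely to be requested next. The LRU cache is a generic textbook technique chosen for illustration. It is not FASTier's algorithm, which this paper does not describe. `BlockCache` and `backend_read` are hypothetical names.

```python
from collections import OrderedDict
from typing import Callable


class BlockCache:
    """Minimal LRU block cache with naive sequential prefetch (illustrative only)."""

    def __init__(self, capacity_blocks: int,
                 backend_read: Callable[[int], bytes],
                 prefetch_depth: int = 1) -> None:
        self.capacity = capacity_blocks
        self.backend_read = backend_read      # slow path, e.g. spinning disk
        self.prefetch_depth = prefetch_depth  # blocks to read ahead on a miss
        self._blocks: "OrderedDict[int, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def __contains__(self, block_id: int) -> bool:
        return block_id in self._blocks

    def _insert(self, block_id: int, data: bytes) -> None:
        self._blocks[block_id] = data
        self._blocks.move_to_end(block_id)
        # Purge least recently used blocks once the memory pool is full.
        while len(self._blocks) > self.capacity:
            self._blocks.popitem(last=False)

    def read(self, block_id: int) -> bytes:
        if block_id in self._blocks:
            self.hits += 1
            self._blocks.move_to_end(block_id)  # mark as most recently used
            return self._blocks[block_id]
        self.misses += 1
        data = self.backend_read(block_id)
        self._insert(block_id, data)
        # Prefetch the following blocks, assuming the access is sequential.
        for nxt in range(block_id + 1, block_id + 1 + self.prefetch_depth):
            if nxt not in self._blocks:
                self._insert(nxt, self.backend_read(nxt))
        return data
```

Replaying a small boot storm shows the effect: 100 desktops each read the same 10 master-image blocks through a 16-block cache, and the backend is touched only 10 times. The first desktop misses on every other block and prefetches the next one. The other 99 desktops are served entirely from cache.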

Obviously, the larger the cache memory pool, the higher the performance. Keep in mind, however, that the goal is optimal price/performance. DRAM and NVRAM cost more per gigabyte than SSD, and far more than HDD. Therefore, most storage controllers keep their cache memory pools small.
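The link between cache size and performance is usually reasoned about through the hit ratio. A larger cache pool holds more of the working set, so more reads are served at cache latency instead of disk latency. The sketch below uses the standard weighted-average model of effective access time. The latency figures in the usage note are hypothetical round numbers, not measurements of any product.

```python
def effective_latency_ms(hit_ratio: float, cache_latency_ms: float,
                         disk_latency_ms: float) -> float:
    """Average read latency of a cache in front of slower disk.

    Weighted average: hits are served at cache latency, misses at disk latency.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_latency_ms + (1.0 - hit_ratio) * disk_latency_ms
```

Assume 0.1 ms for cache and 8 ms for disk. Raising the hit ratio from 50% to 90% then cuts average latency from about 4.05 ms to about 0.89 ms. The catch, as the text notes, is that the memory needed to reach the higher hit ratio is the most expensive capacity in the system.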

With FASTier, you are no longer cache-limited. FASTier allows you to cost-effectively scale your total cache pool up to 9TB using high-performance memory and flash technology.

Alternative approaches can be costly

It can be very challenging to ensure data protection for files that reside in remote or branch offices, which often have few or no onsite IT personnel. A simple, cost-effective solution is to deploy an Assureon secure archive at the organization’s headquarters, complemented by an Assureon Edge in each remote office (providing NFS and CIFS shares).

All data stored on each branch office Assureon Edge is securely transmitted to the Assureon archive storage system at headquarters, where it will be archived. Alternatively, an Assureon Client can be installed on branch office Windows servers; the client archives selected directories and files by transmitting them to the Assureon system at the organization’s headquarters.

Purpose-Built for Cloud Storage

Automated Tiering

Automated tiering is a policy-based process that monitors how frequently data is accessed. Based on this single criterion, it decides where data should be stored and moves data between tiers according to set policies. The theory is that frequently accessed data should be stored on fast storage, while less frequently accessed data should stay on slower tiers. Application performance, however, depends on monitoring I/O in real time and matching resources to those demands as they occur.

VDI environments are characterized by real-time performance demands, and automated tiering was not designed to improve real-time workloads. For example, boot storms happen in real time and therefore must be addressed in real time. Using automated tiering to address these demands would mean placing VDI master images on the expensive, high-performance storage tier, dramatically increasing the cost of the VDI deployment.
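A sketch shows why policy-driven tiering lags real-time demand. The function below plans moves once per monitoring window, using access counts collected during that window. The thresholds, tier names and extent names are hypothetical. Commercial tiering engines use their own criteria and schedules.

```python
from collections.abc import Mapping


def plan_tier_moves(access_counts: Mapping[str, int],
                    current_tier: Mapping[str, str],
                    promote_threshold: int,
                    demote_threshold: int) -> dict[str, str]:
    """Plan tier moves after a monitoring window closes.

    access_counts: accesses per extent seen during the last window.
    current_tier: "fast" or "slow" for every extent.
    Returns {extent: target_tier} for extents that should move.
    """
    moves: dict[str, str] = {}
    for extent, tier in current_tier.items():
        hits = access_counts.get(extent, 0)
        if tier == "slow" and hits >= promote_threshold:
            moves[extent] = "fast"   # hot data is promoted, but only after the window
        elif tier == "fast" and hits <= demote_threshold:
            moves[extent] = "slow"   # cold data is demoted
    return moves
```

Suppose a boot storm drives 500 reads to a `master_image` extent sitting on the slow tier. The extent is promoted only after the window ends, so the storm itself was served from slow disk. Pinning the image permanently on the fast tier avoids that delay, which leads to the cost problem described above.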

All-Flash Storage

With storage performance requirements increasing at a steady pace, the industry has recently turned to solid-state storage as an answer to all performance problems. However, buyer beware. While an all-SSD system can offer unprecedented performance, the associated cost and limited capacities can restrict which applications can actually benefit from it.

For VDI deployments in particular, an all-flash storage system is not practical because the price is simply too high and the capacities are far too low. Even though the cost of SSD has come down, the decision still hinges on achieving a positive ROI.

To summarize, a complete VDI solution requires more than just storage, but storage is the heart of where your data lives. Higher availability and improved worker productivity require a storage system that meets performance and capacity demands at a reasonable cost. NST can address these demands and challenges by enhancing performance with FASTier technology and scaling capacity to grow with your business.